I am a first-year Ph.D. student in Computer Science at Iowa State University, supervised by Professor Yang Li. I earned my M.S. and B.Eng. in Computer Science from Zhejiang University, where I was advised by Professor Zhou Zhao. My research focuses on Multimodal Learning, Large Language Models, and Computer Vision.

📝 Publications

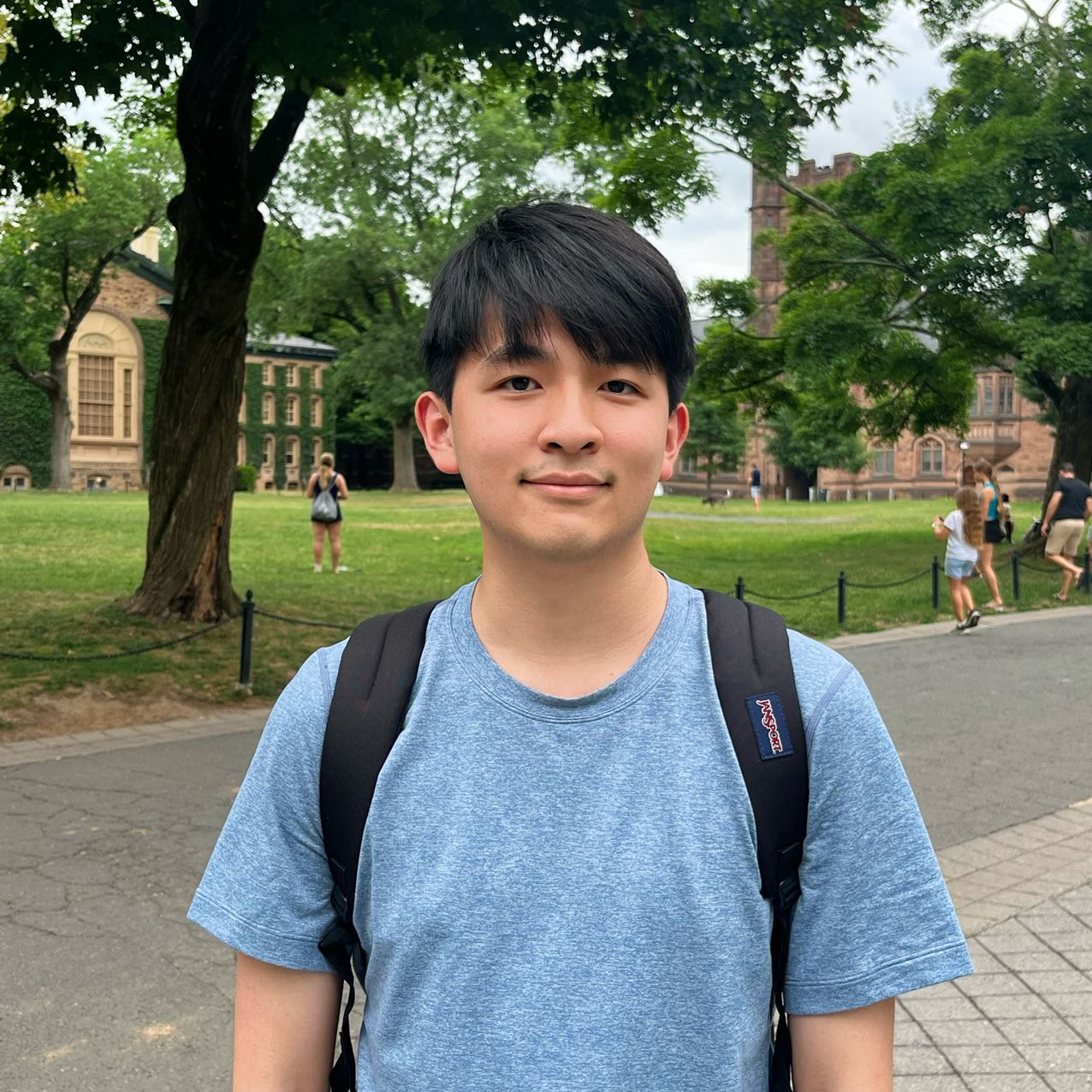

RoboGround: Robotic Manipulation with Grounded Vision-Language Priors.

Haifeng Huang, Xinyi Chen, Yilun Chen, Hao Li, Xiaoshen Han, Zehan Wang, Tai Wang, Jiangmiao Pang, Zhou Zhao

- Create a large-scale simulated robotic manipulation dataset with a diverse set of objects and instructions (24K demonstrations, 112K instructions, and 3,526 unique objects from 176 categories).

- Develop a grounding-aware robotic manipulation policy that leverages grounding masks as an intermediate representation to guide policy networks in object manipulation tasks.

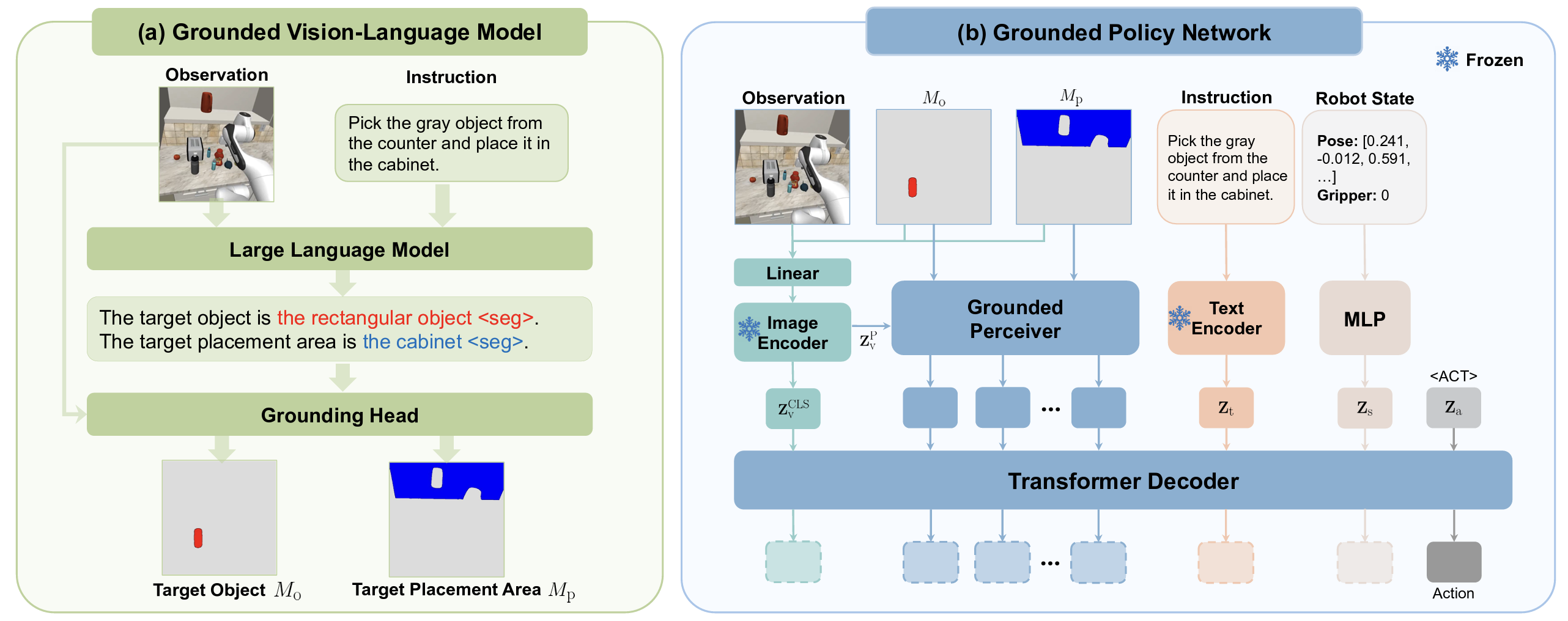

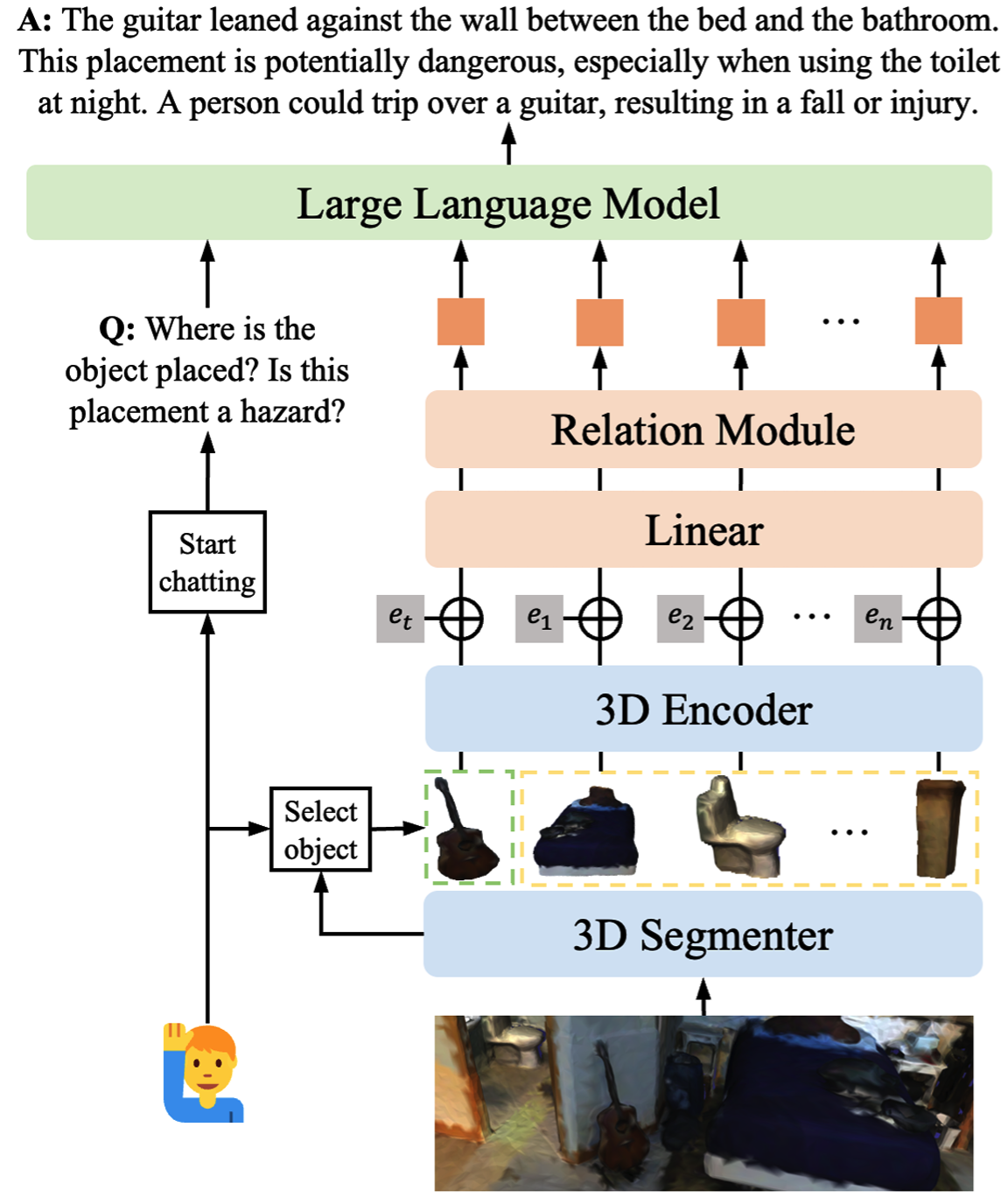

Chat-Scene: Bridging 3D Scene and Large Language Models with Object Identifiers.

Haifeng Huang, Yilun Chen, Zehan Wang, Rongjie Huang, Runsen Xu, Tai Wang, Luping Liu, Xize Cheng, Yang Zhao, Jiangmiao Pang, Zhou Zhao

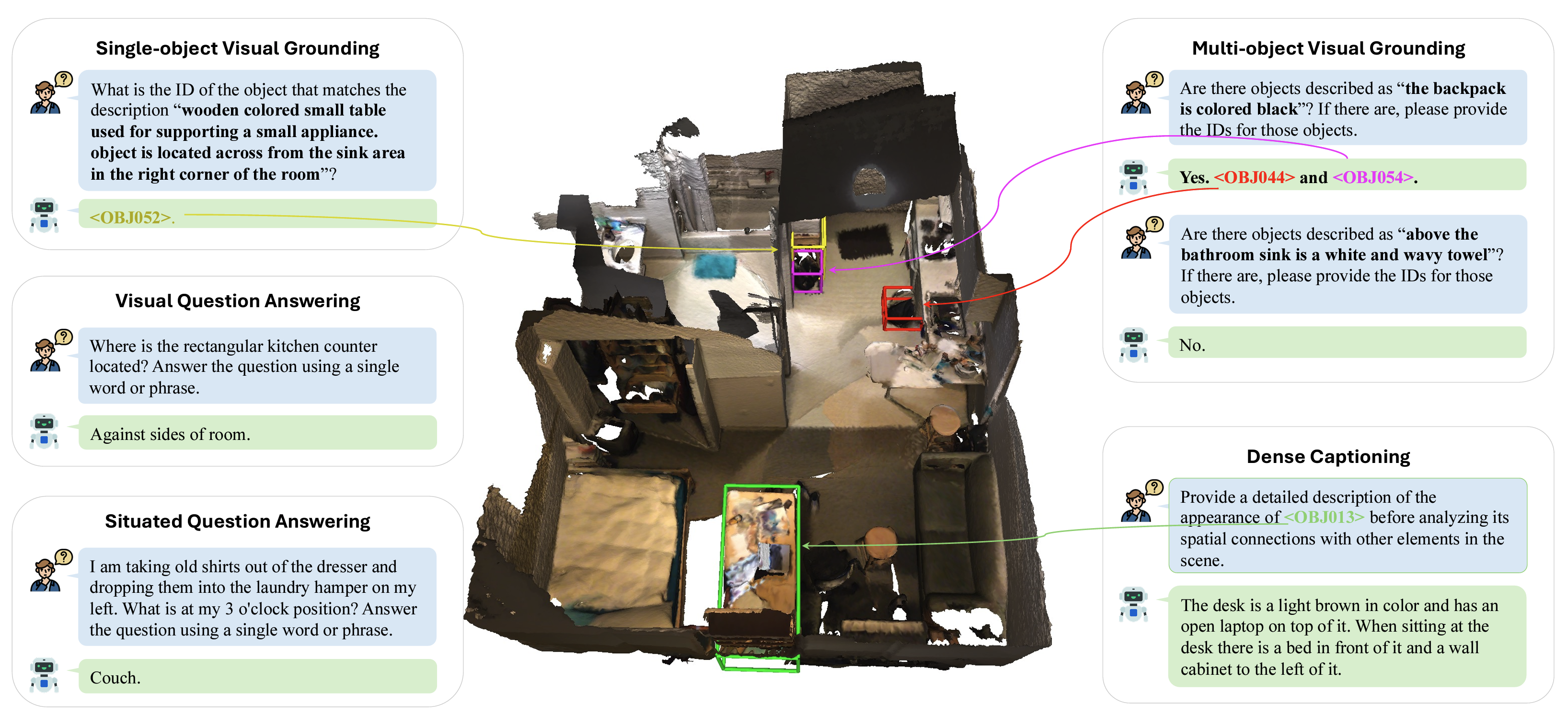

Grounded 3D-LLM with Referent Tokens.

Yilun Chen*, Shuai Yang*, Haifeng Huang*, Tai Wang, Ruiyuan Lyu, Runsen Xu, Dahua Lin, Jiangmiao Pang

- Grounded 3D-LLM establishes a correspondence between 3D scenes and language phrases through referent tokens.

- Create a large-scale, phrase-level grounded scene caption dataset.

Chat-3D: Data-efficiently Tuning Large Language Model for Universal Dialogue of 3D Scenes.

Zehan Wang*, Haifeng Huang*, Yang Zhao, Ziang Zhang, Zhou Zhao

- Chat-3D is one of the first 3D LLMs.

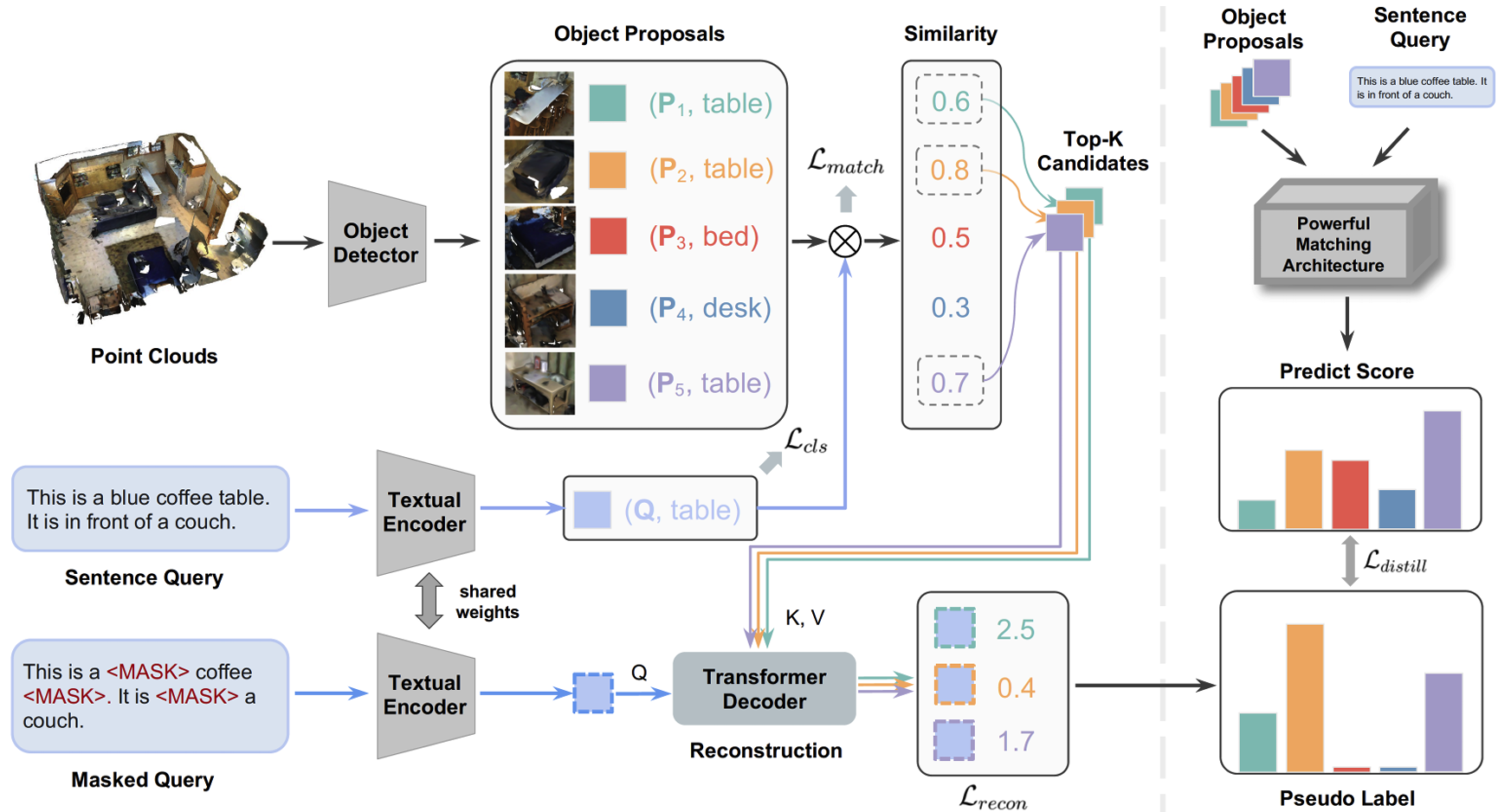

Distilling Coarse-to-Fine Semantic Matching Knowledge for Weakly Supervised 3D Visual Grounding.

Zehan Wang*, Haifeng Huang*, Yang Zhao, Linjun Li, Xize Cheng, Yichen Zhu, Aoxiong Yin, Zhou Zhao

- The first weakly supervised 3D visual grounding method.

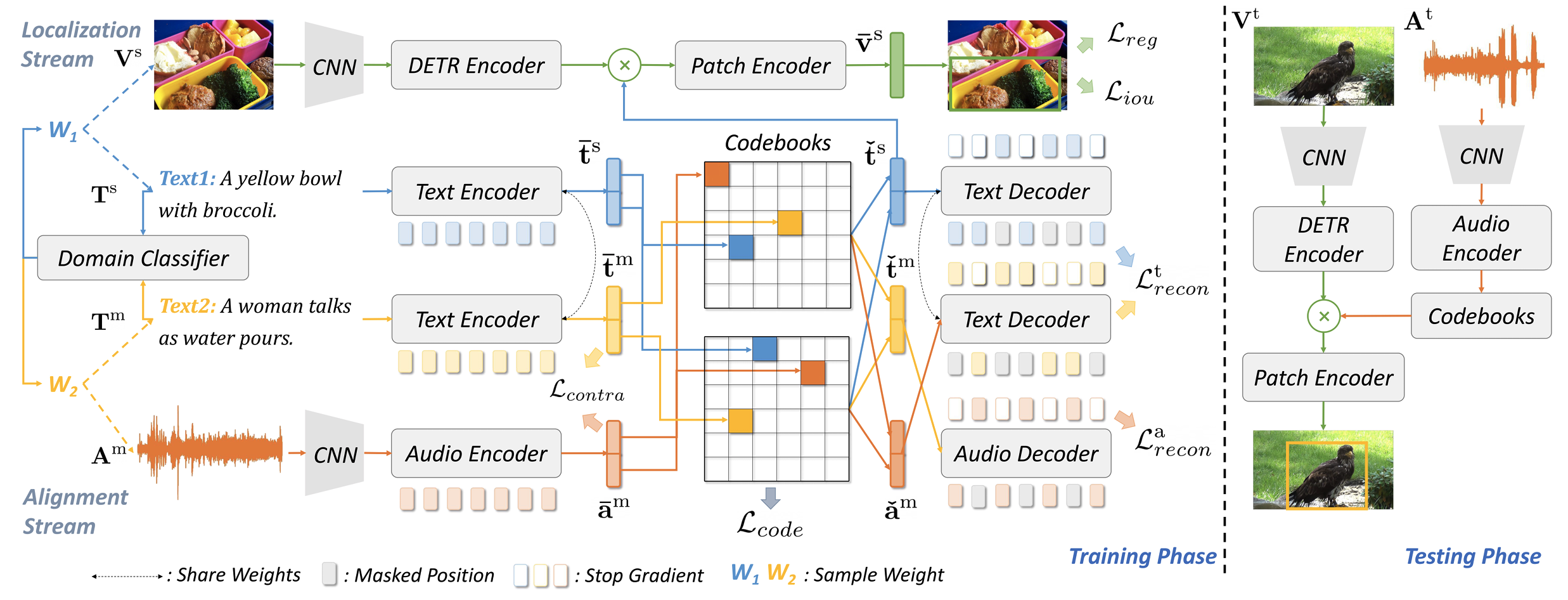

Towards Effective Multi-modal Interchanges in Zero-resource Sounding Object Localization.

Yang Zhao*, Chen Zhang*, Haifeng Huang*, Haoyuan Li, Zhou Zhao

- A zero-resource method for sounding object localization that requires no training data from this task.